Imagine asking an AI system, “Book me a flight, update my calendar, and notify my manager,” and watching it carry out every step by itself. That is the power of agentic AI: systems that act as self-reliant assistants, using tools, workflows, data, and large language models (LLMs) to complete tasks. As businesses move beyond basic chatbots, the trend of “tool stacking” has accelerated in 2025.

This article from PIT Solutions explores how developers can now integrate RPA bots, retrieval systems, LLMs, and other tools into seamless workflows using AI orchestration frameworks.

What Is AI Tool Stacking in Enterprise AI Solutions?

AI tool stacking is the practice of combining different AI services, such as LLMs, knowledge bases, APIs, and automation scripts, into intelligent workflows.

By 2025, instead of coordinating each service manually, developers rely on orchestration layers that dynamically route work among models, data pipelines, RPA bots, and rules engines based on the workflow’s needs.

When a complex objective arrives, a framework such as LangChain or Microsoft AutoGen uses a central LLM (such as GPT-4, Claude, or Gemini) to break it into smaller steps and then dispatches calls to retrieval systems, RPA bots, or custom APIs. The LLM acts as the brain or conductor that makes the decisions, while the tools carry out the specific tasks each step requires.
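The plan-then-dispatch pattern can be sketched in a few lines. This is a minimal illustration, not LangChain's or AutoGen's API: `plan_with_llm` is a hypothetical stub that fakes the LLM's decomposition with keyword matching so the example runs offline, and the three tools in `TOOLS` are hypothetical placeholders for an RPA bot, calendar API, and messaging service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str      # name of the tool the planner chose
    argument: str  # input passed to that tool


def plan_with_llm(goal: str) -> List[Step]:
    """Hypothetical stand-in for the LLM planning call.

    A real system would prompt a model to decompose the goal; this stub
    uses keyword matching so the example runs offline.
    """
    text = goal.lower()
    plan: List[Step] = []
    if "flight" in text:
        plan.append(Step("book_flight", goal))
    if "calendar" in text:
        plan.append(Step("update_calendar", goal))
    if "manager" in text:
        plan.append(Step("notify", "manager"))
    return plan


# Hypothetical tools; in practice each would wrap an RPA bot,
# retrieval system, or custom API.
TOOLS: Dict[str, Callable[[str], str]] = {
    "book_flight": lambda arg: "flight booked",
    "update_calendar": lambda arg: "calendar updated",
    "notify": lambda who: f"{who} notified",
}


def run(goal: str) -> List[str]:
    """Let the planner decide the steps, then dispatch each one to its tool."""
    return [TOOLS[step.tool](step.argument) for step in plan_with_llm(goal)]


if __name__ == "__main__":
    print(run("Book me a flight, update my calendar, and notify my manager"))
```

The key design point is the separation of concerns: the planner only returns tool names and inputs, and the dispatcher looks them up in a registry, so tools can be added or swapped without touching the planning logic.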

Key Components of an Agentic AI Stack

Agentic AI systems are composed of several layers that work together:

Large Language Models (LLMs): These form the brain of the AI system. Models such as GPT-4, Claude, and Gemini, or open-weight options such as Mistral and Llama 3, interpret natural language, understand intent, and trigger appropriate actions through function (tool) calls. Many current LLMs support tool calling natively, which makes it easier to link them with external tools.
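To make "function or tool calls" concrete, here is a minimal sketch of the application side: the tool is described to the model in a JSON Schema style, the model replies with a structured call, and the application validates and executes it. The schema layout is simplified and illustrative (providers differ in field names and nesting, and some return arguments as a JSON string), and `create_calendar_event` is a hypothetical stub.

```python
import json

# Tool description in the JSON Schema style commonly used for function calling.
# Field names and nesting vary by provider; this shape is illustrative.
CREATE_EVENT_TOOL = {
    "name": "create_calendar_event",
    "description": "Add an event to the user's calendar.",
    "parameters": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "date": {"type": "string", "description": "ISO date, e.g. 2025-06-01"},
        },
        "required": ["title", "date"],
    },
}


def create_calendar_event(title: str, date: str) -> str:
    # Hypothetical stub; a real tool would call a calendar API.
    return f"Created '{title}' on {date}"


def execute_tool_call(raw: str) -> str:
    """Parse a tool call emitted by the model, validate it, and run the tool."""
    call = json.loads(raw)
    if call.get("name") != CREATE_EVENT_TOOL["name"]:
        raise ValueError(f"unknown tool: {call.get('name')!r}")
    args = call.get("arguments", {})
    required = CREATE_EVENT_TOOL["parameters"]["required"]
    missing = [p for p in required if p not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return create_calendar_event(args["title"], args["date"])


# Simulated model output; in a real system this JSON comes from the LLM.
model_output = (
    '{"name": "create_calendar_event", '
    '"arguments": {"title": "Flight to Zurich", "date": "2025-06-01"}}'
)

if __name__ == "__main__":
    print(execute_tool_call(model_output))
```

Validating the model's output before executing anything is deliberate: the LLM proposes the call, but the application decides whether it is well-formed enough to run.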

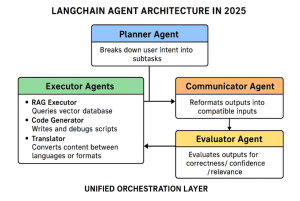

Orchestration & Agent Frameworks: This layer coordinates processes, handles errors, and connects agents. Frameworks such as LangChain, CrewAI, AutoGen, and Haystack enable multi-step or multi-agent workflows. In LangChain’s 2025 ecosystem, for example, the planner, executor, communicator, and evaluator roles are combined under one layer that handles tool routing, memory, parallel execution, and error recovery. Platforms such as Vertex AI Agent Builder and Google’s Agent Development Kit (ADK) provide enterprise-grade tooling for these functions. Agent collaboration can follow standards such as the Agent2Agent (A2A) protocol, while custom behaviors can be implemented with ADK’s Python SDK.
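Two of the orchestration duties named above, parallel execution and error recovery, can be sketched with the standard library alone. This is an illustrative assumption of how such a layer might behave, not the API of LangChain, AutoGen, or ADK: `with_retries`, `execute_parallel`, and `evaluate` are hypothetical names loosely mapped to the executor and evaluator roles, and `FlakyTool` simulates a tool with transient failures.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Optional


def with_retries(step: Callable[[], str], attempts: int = 3, delay: float = 0.0) -> str:
    """Error recovery: re-run a failing step a bounded number of times."""
    last_error: Optional[Exception] = None
    for _ in range(attempts):
        try:
            return step()
        except Exception as exc:  # real code would catch narrower, retryable errors
            last_error = exc
            time.sleep(delay)
    raise RuntimeError(f"step failed after {attempts} attempts") from last_error


def execute_parallel(steps: Dict[str, Callable[[], str]]) -> Dict[str, str]:
    """Executor role: run independent steps concurrently, each with retries."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = {name: pool.submit(with_retries, fn) for name, fn in steps.items()}
        return {name: fut.result() for name, fut in futures.items()}


def evaluate(results: Dict[str, str]) -> bool:
    """Evaluator role (toy check): every step must return a non-empty result."""
    return all(results.values())


class FlakyTool:
    """Hypothetical tool that fails `failures` times before succeeding."""

    def __init__(self, failures: int, value: str) -> None:
        self.failures = failures
        self.value = value

    def __call__(self) -> str:
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("transient failure")
        return self.value


if __name__ == "__main__":
    results = execute_parallel({"crm": FlakyTool(2, "crm ok"), "search": lambda: "search ok"})
    print(results, evaluate(results))
```

Bounding the number of retries matters: an unbounded retry loop is one of the ways autonomous agents get stuck, which is why the final failure is surfaced as an error instead of being swallowed.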

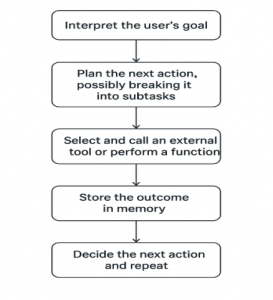

Memory & Retrieval Systems: To maintain context over time, the system stores content that it can later retrieve and add to the LLM’s prompt, a pattern known as Retrieval-Augmented Generation (RAG). Vector databases (Pinecone, Weaviate, Qdrant) or vector search libraries such as FAISS hold embeddings of this information, which the LLM draws on to produce grounded responses. Short-term logs (e.g., in Redis) may be combined with long-term storage in vector databases, allowing agents to recall previous steps or facts and improve response relevance.
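The retrieve-then-augment loop, plus the split between short-term and long-term memory, can be shown with a toy in-memory store. This sketch swaps in word-count vectors and cosine similarity for a learned embedding model and a real vector database, and a bounded `deque` for a Redis-style log; `AgentMemory` and its methods are hypothetical names.

```python
import math
from collections import Counter, deque
from typing import Deque, List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding' (word counts); real systems use a learned embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class AgentMemory:
    def __init__(self, short_term_size: int = 3) -> None:
        # Long-term store: stand-in for a vector database such as Qdrant.
        self.long_term: List[Tuple[str, Counter]] = []
        # Short-term log of recent steps: stand-in for e.g. a Redis list.
        self.short_term: Deque[str] = deque(maxlen=short_term_size)

    def remember(self, fact: str) -> None:
        self.long_term.append((fact, embed(fact)))

    def log_step(self, step: str) -> None:
        self.short_term.append(step)  # oldest entries drop off automatically

    def retrieve(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored facts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [fact for fact, _ in ranked[:k]]

    def build_prompt(self, query: str) -> str:
        """Augment the question with retrieved facts and recent steps."""
        context = "\n".join(self.retrieve(query))
        recent = "\n".join(self.short_term)
        return f"Context:\n{context}\n\nRecent steps:\n{recent}\n\nQuestion: {query}"
```

For example, after `remember("The refund policy allows returns within 30 days")`, a query about the refund policy retrieves that fact and places it in the prompt, so the LLM answers from stored content rather than from its training data alone.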

Tools and APIs: Agents execute tasks using external tools such as SaaS APIs (Jira, Slack, Salesforce), RPA and automation platforms (Power Automate, UiPath, Zapier), microservices, databases, or code interpreters (Python, shell). These are integrated through mechanisms such as LangChain’s Tool class or OpenAI’s function calling. This enables true tool stacking: the LLM selects the right utility (e.g., a CRM API), receives its result, and continues the workflow.
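Tool integration usually means wrapping ordinary functions so the orchestrator can describe them to the LLM and call them by name. The sketch below uses a hypothetical `@tool` decorator that derives a simple parameter schema from each function's signature with `inspect`; it loosely mirrors the idea behind framework tool wrappers but is not LangChain's or OpenAI's API, and both tools are hypothetical stubs.

```python
import inspect
from typing import Any, Callable, Dict

# Hypothetical registry the orchestrator would expose to the LLM.
TOOL_REGISTRY: Dict[str, Dict[str, Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as an agent tool, deriving a schema from its signature."""
    params = {
        name: (p.annotation.__name__ if p.annotation is not inspect.Parameter.empty else "any")
        for name, p in inspect.signature(fn).parameters.items()
    }
    TOOL_REGISTRY[fn.__name__] = {
        "description": inspect.getdoc(fn) or "",
        "parameters": params,
        "func": fn,
    }
    return fn


@tool
def lookup_customer(customer_id: str) -> dict:
    """Fetch a customer record from the CRM (hypothetical stub)."""
    return {"id": customer_id, "tier": "gold"}


@tool
def post_message(channel: str, text: str) -> str:
    """Post a message to a chat channel (hypothetical stub)."""
    return f"posted to {channel}: {text}"


def call_tool(name: str, **kwargs: Any) -> Any:
    """Invoke a registered tool by the name the LLM selected."""
    if name not in TOOL_REGISTRY:
        raise KeyError(f"no tool named {name!r}")
    return TOOL_REGISTRY[name]["func"](**kwargs)
```

Generating descriptions from docstrings and type hints keeps the tool's documentation and its schema in one place, so the description the LLM sees cannot drift away from the function's actual parameters.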

Execution & Hosting Environment: This layer determines where the system is deployed: on-premises for compliance, in containers (Docker/Kubernetes), or on serverless platforms (AWS Lambda, Azure Functions). The infrastructure must manage concurrency, latency, and security. For instance, an enterprise may use cloud GPUs for LLM workloads and Kubernetes clusters for orchestration. By logging events, caching results, and scaling during periods of high demand, the environment keeps agents responsive and stable.
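Result caching is one of the simpler levers the hosting layer has for latency and cost. Below is a minimal sketch of a time-to-live cache for tool calls, assuming results may safely be reused for a fixed window; `ttl_cache` and the `get_exchange_rate` stub are hypothetical, and the injectable `clock` parameter exists only so expiry can be tested without waiting.

```python
import threading
import time
from functools import wraps
from typing import Any, Callable, Dict, Tuple


def ttl_cache(seconds: float, clock: Callable[[], float] = time.monotonic):
    """Cache results per positional-argument tuple for `seconds` seconds."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        store: Dict[Tuple[Any, ...], Tuple[float, Any]] = {}
        lock = threading.Lock()  # agents may call tools from several threads

        @wraps(fn)
        def wrapper(*args: Any) -> Any:
            now = clock()
            with lock:
                hit = store.get(args)
            if hit is not None and now - hit[0] < seconds:
                return hit[1]  # fresh cached value: skip the real call
            value = fn(*args)  # cache miss or stale entry: call the real tool
            with lock:
                store[args] = (now, value)
            return value

        return wrapper

    return decorator


@ttl_cache(seconds=300)
def get_exchange_rate(pair: str) -> float:
    # Hypothetical stub for a slow external API call.
    return 1.0
```

The lock protects only the dictionary; two threads that miss at the same moment may both call the tool once, which is an acceptable trade-off for read-only lookups but not for actions with side effects, which should not be cached at all.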

Observability & Monitoring: Because agents act autonomously, continuous monitoring is crucial. Platforms such as PromptLayer and LangSmith (from LangChain) support prompt tracing, while monitoring tools such as OpenTelemetry, the ELK stack, Grafana, Datadog, or Weights & Biases provide performance metrics. The resulting logs help teams audit behavior, detect loops or hallucinations, and enforce guardrails. For end-to-end traceability, enterprises often integrate agent workflows into their MLOps pipelines.
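Loop detection is a good example of a guardrail that falls out of tracing. This sketch logs every tool call as structured JSON through Python's standard `logging` module and halts the agent when an identical call (same tool, same arguments) repeats more than a configured number of times. `Tracer` and `LoopDetected` are hypothetical names; in production the events would more likely be exported to a tracing backend such as those named above.

```python
import json
import logging
from collections import Counter
from typing import Any, Dict, List

log = logging.getLogger("agent.trace")


class LoopDetected(RuntimeError):
    """Raised when the agent keeps repeating the same tool call."""


class Tracer:
    """Log every tool call and stop the agent when an identical call repeats too often."""

    def __init__(self, max_repeats: int = 3) -> None:
        self.max_repeats = max_repeats
        self.seen: Counter = Counter()
        self.events: List[Dict[str, Any]] = []

    def record(self, tool: str, args: Dict[str, Any]) -> None:
        # Canonical key: sort_keys makes {"a":1,"b":2} and {"b":2,"a":1} identical.
        key = (tool, json.dumps(args, sort_keys=True))
        self.seen[key] += 1
        event = {"tool": tool, "args": args, "count": self.seen[key]}
        self.events.append(event)  # audit trail, kept even if we halt below
        log.info("tool_call %s", json.dumps(event, sort_keys=True))
        if self.seen[key] > self.max_repeats:
            raise LoopDetected(f"{tool} called {self.seen[key]} times with identical args")


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
    tracer = Tracer(max_repeats=2)
    tracer.record("search", {"q": "refund policy"})
    tracer.record("search", {"q": "refund policy"})
```

Recording the event before raising is intentional: the call that triggered the halt is exactly the one an auditor will want to see.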

All these layers work together to deliver the desired outcomes. The agent is the visible interface, while the underlying stack forms the brain, muscles, and nervous system of the AI.

A typical agent flow is:

Emerging Trends and AI Tooling (2025)

LangChain remains a leading open-source backbone, assigning planner, executor, and evaluator roles through its chains, memory, and multi-agent orchestration. Google Cloud’s Vertex AI Agent Builder, ADK, and the Agent2Agent protocol make agents compatible with RAG and extend their reach to services such as Google Search and Maps. Frameworks such as CrewAI and deepset’s Haystack, along with tools like DSPy, Semantic Router, LangSmith, PromptLayer, and Weights & Biases, further enhance orchestration, document processing, and telemetry.

Building agentic systems requires careful handling of state, supervision, and tool failures. Best practices include role-based access control (ensuring agents perform only authorized actions) and human-in-the-loop validation for sensitive or high-impact tasks.
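Both best practices can be combined in a single gate that every agent action passes through. In this minimal sketch, the roles, actions, and the `NEEDS_APPROVAL` set are hypothetical examples, and the `approve` callback stands in for whatever review channel an organization uses (a ticket, a chat prompt, an approval UI).

```python
from typing import Callable, Dict, Set

# Hypothetical role-to-permission mapping for agents.
PERMISSIONS: Dict[str, Set[str]] = {
    "support_agent": {"read_ticket", "post_reply"},
    "finance_agent": {"read_invoice", "issue_refund"},
}
# Hypothetical set of actions considered high-impact enough to need human sign-off.
NEEDS_APPROVAL: Set[str] = {"issue_refund"}


class NotAuthorized(PermissionError):
    """Raised when an agent's role does not permit the requested action."""


def perform(role: str, action: str,
            approve: Callable[[str], bool],
            run: Callable[[], str]) -> str:
    """Run `action` only if the role allows it and, when required, a human approves."""
    # Role-based access control: unknown roles get no permissions at all.
    if action not in PERMISSIONS.get(role, set()):
        raise NotAuthorized(f"{role} may not {action}")
    # Human-in-the-loop: pause high-impact actions until a reviewer decides.
    if action in NEEDS_APPROVAL and not approve(action):
        return f"{action} rejected by reviewer"
    return run()
```

Checking permissions before asking for approval means reviewers are never bothered with requests the agent was not allowed to make in the first place.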

The Future of Enterprise AI: Smarter, Connected, and Scalable

AI tool stacking represents a significant shift in enterprise AI. LLMs no longer function in isolation; they now coordinate entire ecosystems of models, vector stores, APIs, and workflows. This agentic approach creates real value across knowledge management, automation, and customer service, allowing LLMs to take action instead of merely producing text.

For developers, the emphasis is now on connecting systems rather than building standalone models. Combining frameworks such as LangChain or AutoGen with vector databases, function calling, and modular system design gives teams flexibility, smoother updates, and efficient API integration.

The visible “agent” is just the surface: beneath it lies a network of models, memory, tools, and orchestration layers that make enterprise AI powerful, adaptive, and ready for future innovation.

Ready to leverage Enterprise AI solutions?

Partner with PIT Solutions to build intelligent, secure, and scalable AI applications designed around your business goals.

Contact us today to bring your ideas to life through advanced digital solutions.